Have you ever stared down a legacy application and thought, “Man, if only I could get rid of all this infrastructure hassle”? You’re not alone. Picture this: your old reliable app—maybe built in Java or .NET—running on dusty VMs, servers patched only when the moon is full, and every deployment feels like defusing a bomb. What if you could offload all that to a cloud provider and pay only for what you use? Enter serverless architecture, the modern-day fairy godmother for legacy systems.

Why Serverless for Legacy?

Serverless means you focus on writing functions or small services, and your cloud provider handles servers, scaling, and most of the ops. Instead of provisioning VMs or containers, you write discrete units of logic (AWS Lambda functions, Azure Functions, or Google Cloud Run services), and they fire on demand. For a legacy app, this can be a godsend:

1. No More Over-Provisioning

Remember the time you spun up ten servers “just in case” traffic spiked, only to have them sit idle most nights? With serverless, you pay for compute only while your code executes, so idle time costs you nothing (storage and data transfer are still billed). Now that’s a budget-friendly party trick.

2. Effortless Scaling

Legacy apps often hit performance walls under sudden load. Serverless components scale out automatically, spinning up new instances as requests arrive. Spike from 10 to 10,000 requests? No sweat, as long as you stay within your account’s concurrency quota. The cloud transparently provisions the capacity, then shrinks back down when the party’s over, kind of like a bouncy house that deflates itself.

3. Reduced Ops Burden

Patching, OS upgrades, load balancers—oh my! Serverless relegates most of that to your provider. You get to sleep better at night knowing someone else handles heart-stopping patch cycles.
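To put rough numbers on the first two points, here is a back-of-the-envelope sizing sketch. It uses Little’s law (concurrent executions ≈ requests per second × average duration) and the per-GB-second plus per-request pricing model that AWS Lambda and similar services use. The `Workload` class and function names are illustrative, the prices are placeholders you must replace with your provider’s current rates, and free tiers and billing-granularity rounding are ignored.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    requests_per_second: float  # average arrival rate
    avg_duration_s: float       # average execution time per invocation, in seconds
    memory_gb: float            # memory configured for the function, in GB


def required_concurrency(w: Workload) -> float:
    """Little's law: requests in flight = arrival rate x time each one takes."""
    return w.requests_per_second * w.avg_duration_s


def monthly_compute_cost(
    w: Workload,
    price_per_gb_second: float,         # PLACEHOLDER: your provider's compute rate
    price_per_million_requests: float,  # PLACEHOLDER: your provider's request fee
    seconds_per_month: float = 30 * 24 * 3600,
) -> float:
    """Pay-per-use model: zero requests means zero compute cost."""
    invocations = w.requests_per_second * seconds_per_month
    gb_seconds = invocations * w.avg_duration_s * w.memory_gb
    request_fees = invocations / 1_000_000 * price_per_million_requests
    return gb_seconds * price_per_gb_second + request_fees


if __name__ == "__main__":
    spike = Workload(requests_per_second=10_000, avg_duration_s=0.2, memory_gb=0.5)
    # Compare this against your account's concurrency quota before a big launch.
    print(f"concurrent executions needed: {required_concurrency(spike):.0f}")
```

For the 10,000-requests-per-second spike above, a 200 ms function needs about 2,000 concurrent executions, which is exactly the kind of figure worth checking against your quota ahead of time.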

Step-by-Step: Migrating to Serverless

Step 1: Identify Candidate Functionality

Break your legacy monolith into logical units: image processing, report generation, authentication, email notifications. I once worked on a PHP app that generated PDF invoices. We isolated that billing logic—every time an invoice was requested, it triggered a serverless function. Billing ran in its own sandbox, freeing up the main app.
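Here is a minimal sketch of what one carved-out unit can look like as an AWS Lambda Python handler, assuming it sits behind an API Gateway proxy integration. `render_invoice_pdf` is a hypothetical stand-in for the extracted billing logic, and the `invoice_id`/`line_items` request fields are assumptions for illustration.

```python
import base64
import json


def render_invoice_pdf(invoice_id: str, line_items: list) -> bytes:
    """HYPOTHETICAL stand-in for the billing logic carved out of the monolith.

    A real version would call your PDF library or the wrapped legacy code;
    this one returns placeholder bytes so the sketch stays runnable.
    """
    total = sum(item["qty"] * item["unit_price"] for item in line_items)
    return f"INVOICE {invoice_id} TOTAL {total:.2f}".encode()


def lambda_handler(event, context):
    """One invocation per invoice request, isolated from the main app."""
    # API Gateway proxy events carry a JSON string in "body"; direct invokes pass the dict itself.
    raw = event.get("body")
    request = json.loads(raw) if isinstance(raw, str) else event
    pdf = render_invoice_pdf(request["invoice_id"], request["line_items"])
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/pdf"},
        "isBase64Encoded": True,  # binary bodies are returned base64-encoded in proxy responses
        "body": base64.b64encode(pdf).decode("ascii"),
    }
```

Because the handler owns only invoice rendering, it can be deployed, scaled, and rolled back without touching the rest of the app.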

Step 2: Wrap Legacy Code

Not ready to rewrite your whole stack? No problem. You can wrap existing code in a function. On AWS, for example, you can package .NET binaries into a Lambda function, or ship a container image to Lambda if your code has heavy dependencies; on Google Cloud, Cloud Run runs that same kind of container image. When our team first did this, we literally zipped up our invoice service, uploaded it, and voilà: even the creaky old libraries ran fine in the cloud.
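One low-effort way to wrap code you can’t rewrite is to bundle the existing executable with the function and shell out to it. This sketch assumes a simple contract that your legacy program may not follow: it reads one JSON document on stdin and writes one JSON document to stdout. The `LEGACY_CMD` variable and the `/opt/legacy/invoice-service` path are hypothetical.

```python
import json
import os
import subprocess
from typing import List, Optional

# HYPOTHETICAL location of the legacy executable inside the zip or container image.
LEGACY_CMD = os.environ.get("LEGACY_CMD", "/opt/legacy/invoice-service")


def run_legacy(payload: dict, cmd: Optional[List[str]] = None, timeout_s: float = 25.0) -> dict:
    """Pipe the request to the legacy program as JSON and parse its JSON reply."""
    proc = subprocess.run(
        cmd or [LEGACY_CMD],
        input=json.dumps(payload),
        capture_output=True,
        text=True,
        timeout=timeout_s,  # keep this below the function's configured timeout
    )
    if proc.returncode != 0:
        # The exception message carries stderr into the function's logs.
        raise RuntimeError(f"legacy exited with {proc.returncode}: {proc.stderr.strip()}")
    return json.loads(proc.stdout)


def lambda_handler(event, context):
    # The function is now just a thin adapter between the event and the old binary.
    return run_legacy(event)
```

The `cmd` parameter exists mainly so you can point the wrapper at a stub during local tests.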

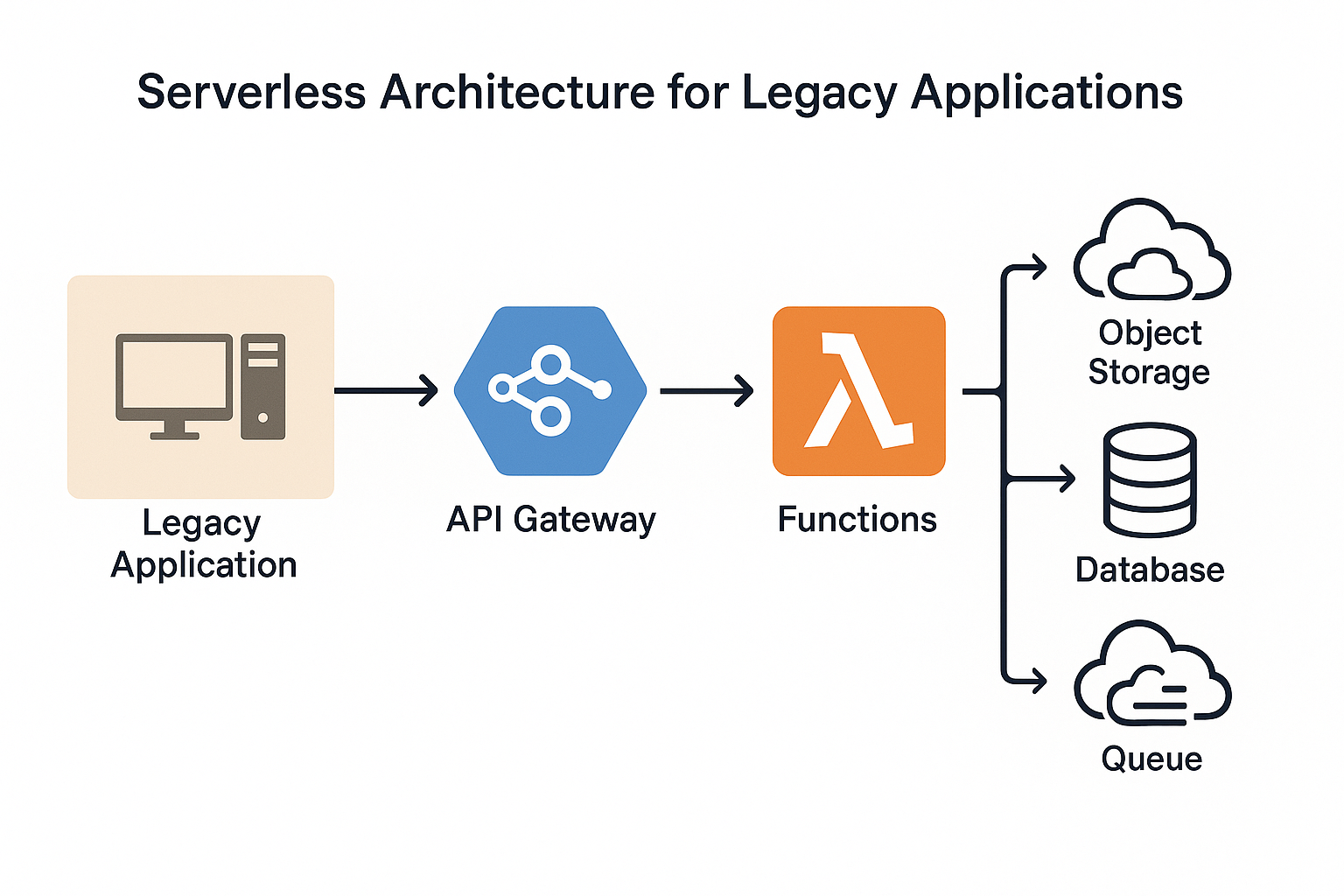

Step 3: Decouple via Messaging

To glue the monolith and serverless world together, introduce a message bus: SNS/SQS on AWS, Pub/Sub on GCP, or Event Grid on Azure. For instance, the monolith publishes an “invoiceRequested” event to a queue; the serverless invoice function picks it up, processes the PDF, and then pushes the result back or stores it in S3. This decoupling makes both sides happier: the monolith can keep chugging along, and serverless functions can scale independently.
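On AWS, the consuming side of that queue might look like the sketch below: an SQS-triggered handler that picks out `invoiceRequested` messages and reports failures per message. It assumes the monolith sends plain JSON bodies with a `type` field (a hypothetical message shape; if messages arrive through SNS without raw message delivery, the body is wrapped in an SNS envelope instead). Returning `batchItemFailures` only takes effect when `ReportBatchItemFailures` is enabled on the event source mapping.

```python
import json
from typing import Callable


def make_sqs_handler(process_invoice: Callable[[dict], None]):
    """Build a handler for a function subscribed to the invoice queue."""

    def handler(event, context):
        failures = []
        for record in event.get("Records", []):
            try:
                message = json.loads(record["body"])
                if message.get("type") != "invoiceRequested":
                    continue  # not ours; it is treated as handled and removed from the queue
                process_invoice(message)
            except Exception:
                # Report only this message as failed so SQS redelivers it alone.
                failures.append({"itemIdentifier": record["messageId"]})
        return {"batchItemFailures": failures}

    return handler
```

Injecting `process_invoice` keeps the queue plumbing separate from the business logic, which also makes the handler easy to unit test.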

Step 4: Secure and Configure

Serverless security hinges on least-privilege permissions. Don’t give your functions “god mode”—grant only the specific roles they need (like reading from your DB, writing to storage, or publishing events). When we first moved our notification logic to serverless, we accidentally gave it full database access. Oops—lesson learned quickly!
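A cheap guardrail against “god mode” is to scan IAM policy documents for wildcard grants before they ship. This is a rough heuristic sketch, not a full policy analyzer: it ignores `NotAction`/`NotResource` and conditions, and a few AWS actions legitimately require `"Resource": "*"`, so treat hits as prompts for review rather than hard failures.

```python
def overly_broad_statements(policy: dict) -> list:
    """Return Allow statements whose Action or Resource is a wildcard grant."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be given as an object
        statements = [statements]

    def as_list(value):
        return value if isinstance(value, list) else [value]

    flagged = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        actions = as_list(stmt.get("Action", []))
        resources = as_list(stmt.get("Resource", []))
        # "*" or "service:*" grants every action; a bare "*" resource means every resource.
        if any(a == "*" or a.endswith(":*") for a in actions) or "*" in resources:
            flagged.append(stmt)
    return flagged
```

Running a check like this in CI would have caught our notification function’s full database access before it ever deployed.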

Step 5: Monitor and Optimize

Use built-in monitoring such as CloudWatch, Google Cloud Monitoring (formerly Stackdriver), or Application Insights to track cold starts, invocation times, and errors. At first, our cold starts spiked to 1.2 seconds every few hours, causing a laggy user experience. We added a tiny scheduled “keep-alive” ping to keep functions warm, and those occasional waits vanished.
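Here is a sketch of both halves of that fix on AWS: a module-level flag that logs whether an invocation was a cold start, and an early return for warm-up pings sent by an EventBridge scheduled rule (which delivers events with `source` set to `aws.events` and `detail-type` set to `Scheduled Event`). The log field names are our own choice. Keep in mind a single ping keeps roughly one execution environment warm; a burst that needs more parallel instances still pays cold starts on the extra ones.

```python
import json

# Module scope runs once per new execution environment, i.e. on a cold start.
_COLD_START = True


def is_warmup_ping(event: dict) -> bool:
    """True for events from an EventBridge scheduled rule."""
    return event.get("source") == "aws.events" and event.get("detail-type") == "Scheduled Event"


def handler(event, context):
    global _COLD_START
    cold, _COLD_START = _COLD_START, False
    warmup = is_warmup_ping(event)
    # One JSON log line per invocation lets you chart cold starts in your log tool.
    print(json.dumps({"cold_start": cold, "warmup": warmup}))
    if warmup:
        return {"warmed": True}  # skip real work; the ping only keeps this environment alive
    # ... real request handling goes here ...
    return {"ok": True, "cold_start": cold}
```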

Anecdotes & Insights

- The Cold-Start Shock: I’ll never forget demoing a cool serverless feature in front of execs at 9 a.m. We hit a cold start, and our image-resizing function took over two seconds to respond. Cue awkward silence. Now we architect with minimum concurrency and “keep-alive” strategies built in.

- From 10 VMs to Zero: Our team once retired a cluster of 10 Linux VMs that handled thumbnail generation. Migrating to a serverless function cut our monthly infra bill by 70%—our finance lead still high-fives me every quarter.

- Function Spaghetti: Beware of over-decomposing; you don’t want fifty tiny functions calling each other in an unreadable tangle. Group related logic into cohesive functions, or use orchestrators like Step Functions on AWS or Durable Functions on Azure to manage complex flows.
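Instead of having functions call each other directly, a Step Functions state machine can own the sequencing and retries. This sketch builds an Amazon States Language definition for a simple linear flow; the step names and Lambda ARNs are hypothetical, and real workflows usually add `Catch` handlers, branching, or parallel states.

```python
def linear_state_machine(steps: list, max_retries: int = 2) -> dict:
    """Build an ASL definition that runs Lambda tasks one after another.

    `steps` is a list of (state_name, lambda_arn) pairs. Each task is retried
    up to `max_retries` times on any error before the execution fails.
    """
    if not steps:
        raise ValueError("need at least one step")
    states = {}
    for i, (name, arn) in enumerate(steps):
        state = {
            "Type": "Task",
            "Resource": arn,
            "Retry": [{"ErrorEquals": ["States.ALL"], "MaxAttempts": max_retries}],
        }
        if i + 1 < len(steps):
            state["Next"] = steps[i + 1][0]  # hand off to the following step
        else:
            state["End"] = True  # last step terminates the execution
        states[name] = state
    return {"StartAt": steps[0][0], "States": states}
```

Serialize the result with `json.dumps` and use it as the state machine definition; each function stays small and ignorant of who runs next.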

Overcoming Common Hurdles

- Vendor Lock-In Fears: Yes, serverless offerings tie you to provider-specific triggers, APIs, and deployment tooling. Mitigate by using open-source frameworks like the Serverless Framework or Knative for portability.

- Debugging Nightmares: Debugging distributed functions can feel like chasing ghosts. Embrace structured logging and correlation IDs early so you can trace a request across services.

- Latency Spikes: Network hops add latency. Keep high-throughput or latency-sensitive paths in your monolith or a containerized service, and move batch or non-critical paths to serverless functions.

- Testing Complexity: Local testing of serverless can be tricky. Use emulators (SAM CLI, Functions Framework) and write plenty of unit tests before deploying to the cloud.
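For the debugging hurdle, here is a minimal structured-logging setup using only Python’s standard `logging` module: every line is a JSON object, and each request carries a correlation ID. The `correlation_id` event field is a hypothetical convention; the point is to reuse an incoming ID when one exists and forward it in every message you publish.

```python
import json
import logging
import uuid


class JsonFormatter(logging.Formatter):
    """Emit each log record as a single JSON object so tools can filter by field."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            "correlation_id": getattr(record, "correlation_id", None),
        })


logger = logging.getLogger("invoice")
logger.setLevel(logging.INFO)
logger.propagate = False  # avoid duplicate lines if the runtime configures the root logger
_console = logging.StreamHandler()
_console.setFormatter(JsonFormatter())
logger.addHandler(_console)


def correlation_id_from(event: dict) -> str:
    # HYPOTHETICAL field name: reuse the caller's ID if it sent one, else mint a new one.
    return event.get("correlation_id") or str(uuid.uuid4())


def handler(event, context):
    cid = correlation_id_from(event)
    logger.info("invoice requested", extra={"correlation_id": cid})
    # Forward cid in every outgoing message so downstream functions log the same ID.
    return {"correlation_id": cid}
```

Searching your logs for one ID then shows the whole path a request took, from the monolith through each function.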

Conclusion

Converting your legacy app to serverless isn’t a one-click magic trick—it’s an iterative journey of wrapping, decoupling, and fine-tuning. But the rewards are sweet: no more over-provisioned servers, effortless scaling under load, and a dramatic drop in ops headaches. Ready to lift your dusty VMs into cloud functions? Pick one small piece, spin up that first function, and let the serverless revolution begin!