Ever watched your monolith grind to a halt when a batch job kicked off, or seen your services choke waiting for synchronous calls to complete? I have—imagine a sudden surge of orders on your e-commerce site, each service calling the next in a domino chain until everything times out. That’s when I discovered Event-Driven Architecture (EDA)—a way to decouple components so they communicate via asynchronous events, letting your system stay responsive under load. Ready to see how emitting, listening, and reacting to events can turn your apps into nimble, resilient ecosystems? Let’s dive in.

What Is Event-Driven Architecture?

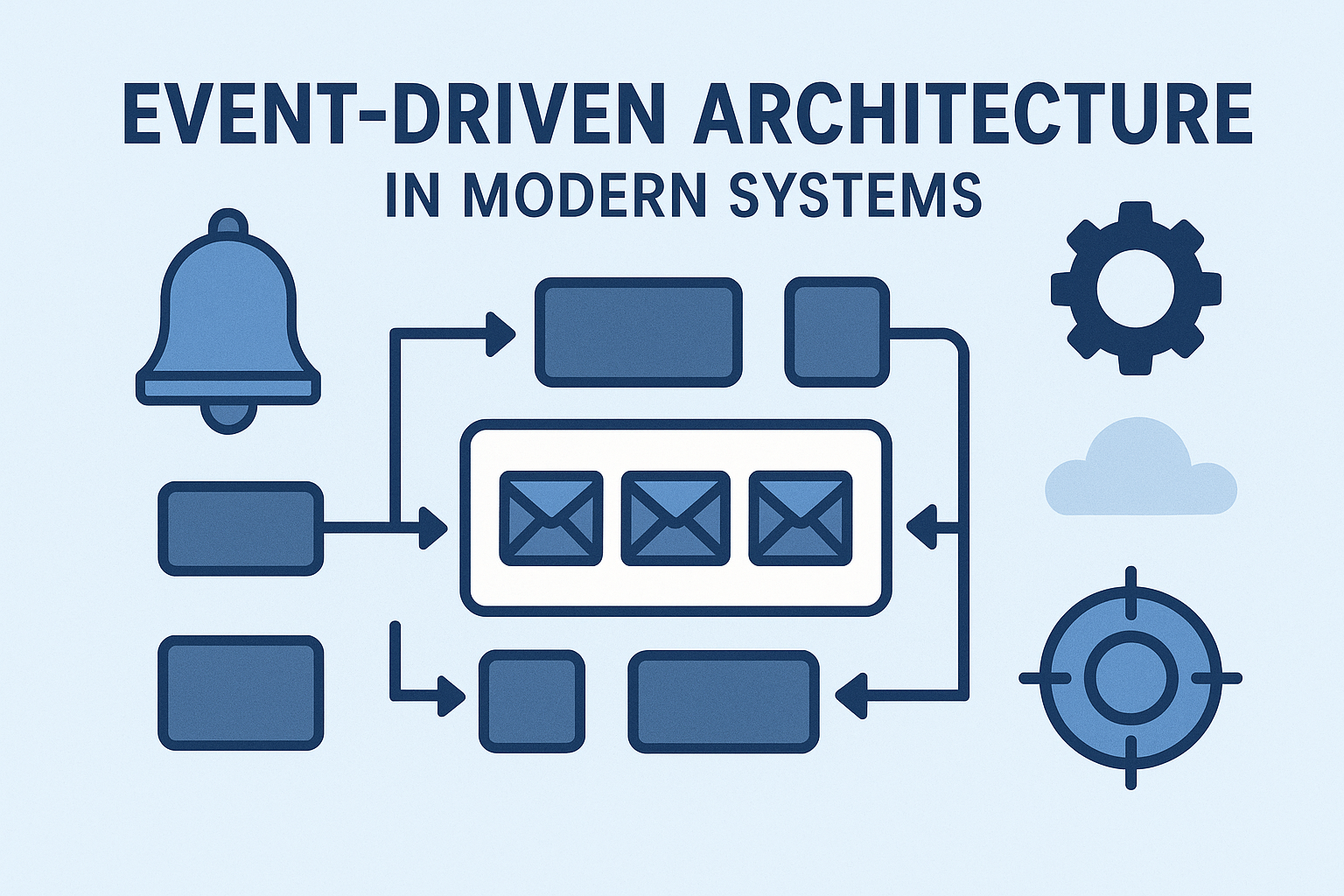

Event-Driven Architecture is a design paradigm where some services (event producers) emit messages whenever something noteworthy happens (an “event”), and other services (event consumers) react to those events independently, whenever they’re ready. Unlike request-response models that block the caller until the callee responds, EDA offloads work into event streams, enabling:

Loose Coupling: Producers know nothing about who listens; they simply publish events.

Resilience: If a consumer is down, events can queue up for later processing.

Scalability: You can independently scale producers, brokers, and consumers.

Flexibility: New consumers can join the party by subscribing to event topics without touching existing code.

Picture your system as a bustling marketplace: rather than shouting orders down a straight line of workers, you post notices on a community board (“OrderPlaced: ID 12345”), and any shop (inventory, billing, shipping) picks up the notice asynchronously when it’s free. The synchronous call chain stops being the bottleneck, and every service marches to its own drumbeat.
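To make the community board concrete, here is a minimal sketch of the idea in Python. The `EventBus` class and its method names are my own illustration, not the API of any real broker; a real broker would also persist events and deliver them across processes.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Handler = Callable[[dict], None]


class EventBus:
    """Toy in-memory stand-in for a broker (hypothetical, for illustration only)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)
        self._queue: List[Tuple[str, dict]] = []

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # The producer only appends to the queue; it never calls a consumer directly.
        self._queue.append((topic, event))

    def drain(self) -> int:
        """Deliver queued events to subscribers; returns how many events were delivered."""
        delivered = 0
        while self._queue:
            topic, event = self._queue.pop(0)
            for handler in self._subscribers[topic]:
                handler(event)
            delivered += 1
        return delivered


bus = EventBus()
reserved, invoiced = [], []

# Two independent "shops" read the same notice; the producer knows about neither.
bus.subscribe("OrderPlaced", lambda e: reserved.append(e["order_id"]))
bus.subscribe("OrderPlaced", lambda e: invoiced.append(e["order_id"]))

bus.publish("OrderPlaced", {"order_id": 12345})
bus.drain()
```

Adding a third subscriber, say shipping, takes one more `subscribe` call and no change to the publishing code, which is the loose coupling described above.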

Implementing EDA—Step by Step (With Anecdotes)

Step 1: Identify Key Events

Begin by mapping out your domain events—the things your system cares about. Examples include UserRegistered, PaymentProcessed, OrderShipped, or InventoryLow. I once worked on a retail platform and realized our “order workflow” emitted only HTTP callbacks, causing cascading delays. We redefined five core events—OrderCreated, PaymentCompleted, InventoryReserved, OrderPacked, OrderDelivered—and it felt like we’d cracked the code on responsiveness.
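Once the events have names, it can help to write them down as data. The sketch below is one possible shape, not what we actually shipped: the `Event` class, the `event_id` and `occurred_at` fields, and the `orderId` payload key are all assumptions for illustration.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

# The five core events from the retail platform anecdote.
ORDER_EVENTS = (
    "OrderCreated",
    "PaymentCompleted",
    "InventoryReserved",
    "OrderPacked",
    "OrderDelivered",
)


@dataclass(frozen=True)
class Event:
    """Hypothetical event envelope: a type name, a payload, and some metadata."""

    type: str
    payload: dict
    # A unique ID lets consumers detect duplicate deliveries later on.
    event_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    occurred_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        return json.dumps(asdict(self))


event = Event(type="OrderCreated", payload={"orderId": "12345"})
decoded = json.loads(event.to_json())
```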

Step 2: Choose an Event Broker

Select a messaging backbone that fits your scale and needs:

Lightweight: RabbitMQ or Redis Streams for moderate throughput and simple routing.

High-Scale: Apache Kafka or AWS Kinesis for millions of events per second, durable logs, and replayability.

Cloud-Native: AWS EventBridge or GCP Pub/Sub for managed scaling and integrations.

On one project, we started with RabbitMQ and hit limits during Black Friday; migrating to Kafka let us horizontally scale partitions and replay missed events when a consumer crashed. Total game-changer.
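Replay is easier to see in miniature. The sketch below models a single log partition with committed offsets per consumer group. It is a toy: `EventLog`, `poll`, and `commit` are hypothetical names that only loosely mirror how a log-based broker behaves, and none of this is Kafka's actual client API.

```python
from typing import Dict, List, Tuple


class EventLog:
    """Toy append-only log, loosely modeled on a single log partition (hypothetical)."""

    def __init__(self) -> None:
        self._records: List[dict] = []
        self._committed: Dict[str, int] = {}  # consumer group -> next offset to read

    def append(self, record: dict) -> int:
        self._records.append(record)
        return len(self._records) - 1  # offset of the new record

    def poll(self, group: str, max_records: int = 10) -> List[Tuple[int, dict]]:
        # Reading never removes records; it only starts from the committed position.
        start = self._committed.get(group, 0)
        batch = self._records[start:start + max_records]
        return list(enumerate(batch, start=start))

    def commit(self, group: str, offset: int) -> None:
        self._committed[group] = offset + 1


log = EventLog()
for order_id in (1, 2, 3):
    log.append({"type": "OrderCreated", "orderId": order_id})

first_batch = log.poll("shipping")
log.commit("shipping", first_batch[0][0])  # only offset 0 is committed before the "crash"

after_restart = log.poll("shipping")  # resumes at offset 1; offsets 1 and 2 are redelivered
```

Because the log keeps every record and the consumer only moves a pointer, a crash costs you some reprocessing rather than lost events.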

Step 3: Define Event Schemas

Use a schema registry, together with a schema format such as Avro, Protobuf, or JSON Schema, to version and validate events. This prevents “schema drift” where producers and consumers disagree on field names or types. We learned this the hard way: our OrderCreated event once misspelled customerId as custId, causing downstream consumers to stall. A schema registry with enforced compatibility rules would’ve saved us a day of debugging.
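Here is a rough sketch of the two checks that would have caught our custId bug. It uses plain Python dictionaries instead of Avro or Protobuf, the `ORDER_CREATED_V1` schema and the `total` field are assumptions for illustration, and `keeps_existing_fields` is a deliberately simplified rule, not the compatibility semantics of any real registry.

```python
from typing import Dict, List

# Hypothetical schema: field name -> expected Python type.
ORDER_CREATED_V1: Dict[str, type] = {"orderId": str, "customerId": str, "total": float}


def validate(event: dict, schema: Dict[str, type]) -> List[str]:
    """Return a list of problems; an empty list means the event matches the schema."""
    errors = []
    for name, expected in schema.items():
        if name not in event:
            errors.append(f"missing field: {name}")
        elif not isinstance(event[name], expected):
            actual = type(event[name]).__name__
            errors.append(f"{name}: expected {expected.__name__}, got {actual}")
    for name in event:
        if name not in schema:
            errors.append(f"unknown field: {name}")
    return errors


def keeps_existing_fields(old: Dict[str, type], new: Dict[str, type]) -> bool:
    """Simplified rule: a new version may add fields but must keep old ones unchanged."""
    return all(new.get(name) is expected for name, expected in old.items())


# The misspelled event from the anecdote fails validation before it is published.
bad_event = {"orderId": "12345", "custId": "c-9", "total": 42.0}
errors = validate(bad_event, ORDER_CREATED_V1)

v2_adds_currency = dict(ORDER_CREATED_V1, currency=str)
v2_renames_field = {"orderId": str, "custId": str, "total": float}
```

Running `validate` in the producer and `keeps_existing_fields` in CI is one way to fail fast; a real registry does the second check for you when a new schema version is registered.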

Step 4: Build Producers and Consumers

Producers: Instrument code to publish events at transaction boundaries. For example, after committing an order to the database, emit OrderCreated with order details.

Consumers: Subscribe to relevant topics, process events idempotently (so replays don’t duplicate work), and emit their own events (InventoryReserved → OrderProcessed).

I recall our shipping service consumer once processed an OrderCreated event twice (because of at-least-once delivery) and dispatched two trucks for the same order. We solved it by checking a “processedEvents” table before acting, making our consumer safe to replay.
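A minimal sketch of that guard, using SQLite from the standard library. The table and field names (`processed_events`, `event_id`, `order_id`) are assumptions, and instead of a separate check-then-act query this version leans on a primary-key constraint, so two concurrent deliveries can't both pass the check.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE processed_events (event_id TEXT PRIMARY KEY)")

dispatched = []  # stand-in for "send a truck"


def handle_order_created(event: dict) -> bool:
    """Dispatch once per event_id; returns False when the delivery is a duplicate."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "INSERT INTO processed_events (event_id) VALUES (?)",
                (event["event_id"],),
            )
            # Only reached when the insert succeeded, i.e. the event is new.
            # A real side effect would need to share this transaction or be
            # idempotent itself.
            dispatched.append(event["order_id"])
    except sqlite3.IntegrityError:
        return False  # primary-key conflict: this event was already handled
    return True


event = {"event_id": "evt-1", "order_id": 12345}
results = [handle_order_created(event), handle_order_created(event)]
```

The second call returns `False` and `dispatched` still holds a single order, so at-least-once delivery no longer means two trucks.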

Step 5: Handle Failure and Retries

Implement retry policies, dead-letter queues, and exponential backoff. If a consumer fails to process an event—say, due to a transient database outage—retry a few times, then push the event to a dead-letter topic for manual inspection. One time, our payment gateway was down temporarily; our dead-letter queue let us resume processing exactly where we left off once the gateway recovered.
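The retry-then-park flow can be sketched in a few lines. Everything here is illustrative: `process_with_retry`, its parameters, and the list used as a dead-letter queue are hypothetical, and most brokers and client libraries ship their own retry and dead-letter features that you would use instead.

```python
import time
from typing import Callable, List


def process_with_retry(
    event: dict,
    handler: Callable[[dict], None],
    dead_letters: List[dict],
    max_attempts: int = 3,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Try the handler up to max_attempts times, doubling the wait between tries."""
    for attempt in range(max_attempts):
        try:
            handler(event)
            return True
        except Exception as exc:
            if attempt == max_attempts - 1:
                # Out of retries: park the event for manual inspection.
                dead_letters.append({"event": event, "error": repr(exc)})
                return False
            sleep(base_delay * 2 ** attempt)  # waits 0.1s, then 0.2s, then 0.4s, ...
    return False


delays, dead_letters, calls = [], [], {"n": 0}


def flaky(event: dict) -> None:
    """Fails twice, then succeeds, like a database that comes back after an outage."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("database unavailable")


def broken(event: dict) -> None:
    raise ValueError("malformed payload")


# Passing delays.append as sleep records the backoff schedule without actually waiting.
ok = process_with_retry({"id": 1}, flaky, dead_letters, sleep=delays.append)
failed = process_with_retry({"id": 2}, broken, dead_letters, sleep=delays.append)
```

The flaky handler recovers on its third attempt, while the permanently broken event ends up in `dead_letters` with its error attached instead of blocking the events behind it.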

Step 6: Monitor, Trace, and Evolve

Use distributed tracing (OpenTelemetry, Jaeger) to visualize event flows across services, and gather metrics on event latency, error rates, and consumer lag. In our setup, dashboards showing consumer lag alerted us immediately when one new microservice fell behind during a traffic spike, allowing us to spin up extra instances before users noticed any slowdowns.
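Consumer lag itself is simple arithmetic: how far the consumer's committed position trails the newest event in each partition. A small sketch, with made-up offsets and a made-up alert threshold; in practice your broker's tooling or metrics exporter reports these numbers for you.

```python
from typing import Dict, List


def consumer_lag(latest: Dict[int, int], committed: Dict[int, int]) -> Dict[int, int]:
    """Per-partition lag: newest offset in the log minus the consumer's committed offset."""
    return {p: newest - committed.get(p, 0) for p, newest in latest.items()}


def lagging_partitions(lag: Dict[int, int], threshold: int) -> List[int]:
    """Partitions whose lag exceeds the alert threshold, in ascending order."""
    return sorted(p for p, behind in lag.items() if behind > threshold)


# Hypothetical snapshot: partition 2 has fallen far behind during a spike.
latest_offsets = {0: 1500, 1: 1480, 2: 9000}
committed_offsets = {0: 1495, 1: 1480, 2: 4000}

lag = consumer_lag(latest_offsets, committed_offsets)
alerts = lagging_partitions(lag, threshold=1000)
```

A dashboard that plots `lag` over time, plus an alert on `alerts` being non-empty, is roughly what told us to add instances before users noticed.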

Overcoming Common Hurdles

Event Storms: If you blindly publish everything, you can overwhelm consumers. Combat this with event filtering, or split topics so consumers only subscribe to what they need.

Data Consistency: Asynchronous processing means eventual consistency, not immediate. Design UIs and APIs to handle “in-progress” states gracefully, showing loaders or status messages.

Schema Evolution: Changing event formats risks breaking old consumers. Enforce backward compatibility in your schema registry and version major changes.

Operational Complexity: More moving parts (brokers, registry, multiple services) means more to manage. Automate deployments with IaC, and centralize observability so you’re not chasing logs across ten clusters.

Conclusion

Event-Driven Architecture transforms your system from a brittle line of dominoes into a vibrant, decoupled mesh of event producers and consumers. By identifying your key events, choosing the right broker, defining solid schemas, handling failures gracefully, and investing in observability, you’ll build applications that scale, adapt, and recover with ease. Ready to flip the switch on events? Sketch your first event schema, spin up a broker in minutes, and let your services start chatting asynchronously. Your system—and your future self—will thank you!